A new initiative to better identify child predators who obscure their activity by jumping among tech platforms was announced Tuesday by The Tech Coalition, an industry group that includes Discord, Google, Mega, Meta, Quora, Roblox, Snap, and Twitch.

The initiative, called Lantern, allows companies in the coalition to share information about potential child sexual exploitation, which will increase their prevention and detection capabilities, speed up the identification of threats, build situational awareness of new predatory tactics, and strengthen the reporting of criminal offenses to authorities.

In a posting on the coalition’s website, Executive Director John Litton explained that online child sexual exploitation and abuse are pervasive threats that can cross various platforms and services.

Two of the most pressing dangers today are inappropriate sexualized contact with a child, referred to as online grooming, and financial sextortion of young people, he continued.

“To carry out this abuse, predators often first connect with young people on public forums, posing as peers or friendly new connections,” he wrote. “They then direct their victims to private chats and different platforms to solicit and share child sexual abuse material (CSAM) or coerce payments by threatening to share intimate images with others.”

“Because this activity spans across platforms, in many cases, any one company can only see a fragment of the harm facing a victim,” he noted. “To uncover the full picture and take proper action, companies need to work together.”

Gathering Signals To Combat Child Exploitation

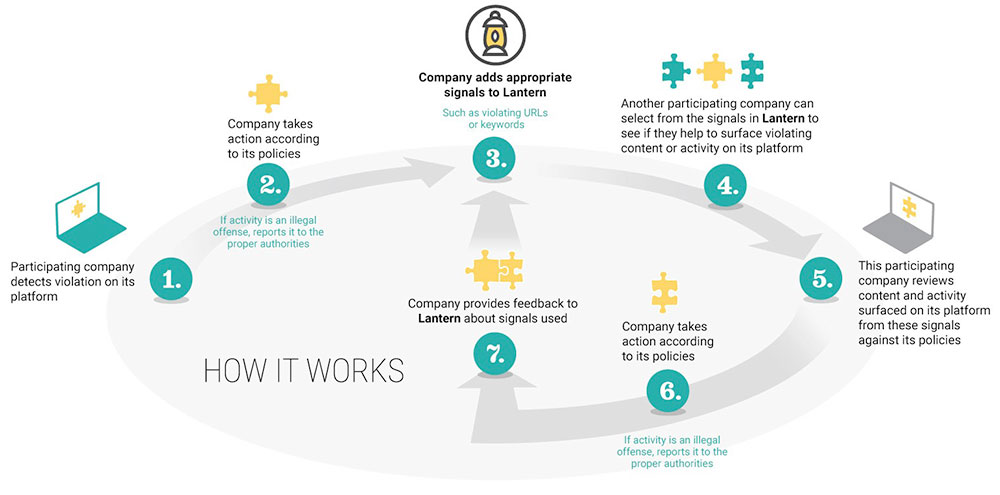

Here’s how the Lantern program works:

- Participating companies upload “signals” to Lantern about activity that violates their policies against child sexual exploitation identified on their platform.

- Signals can be information tied to policy-violating accounts like email addresses, usernames, CSAM hashes, or keywords used to groom as well as buy and sell CSAM. Signals are not definitive proof of abuse. They offer clues for further investigation and can be the crucial piece of the puzzle that enables a company to uncover a real-time threat to a child’s safety.

- Once signals are uploaded to Lantern, participating companies can select them, run them against their platform, review any activity and content the signal surfaces against their respective platform policies and terms of service, and take action in line with their enforcement processes, such as removing an account and reporting criminal activity to the National Center for Missing and Exploited Children and the appropriate law enforcement agency.

How the Lantern Child Safety Signal Sharing Program works (Infographic Credit: The Tech Coalition)
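
The upload, select, match, and review steps described above can be illustrated with a minimal Python sketch. It is not Lantern's actual interface, which the coalition's posting does not detail: the `Signal`, `SignalStore`, `Account`, and `queue_for_review` names, the fields, and the sample values are all hypothetical. The sketch only shows the general idea that a matched signal becomes a lead for human review rather than an automatic enforcement action.

```python
from dataclasses import dataclass
from enum import Enum


class SignalKind(Enum):
    # Signal types named in the article; the enum itself is hypothetical.
    EMAIL = "email"
    USERNAME = "username"
    CONTENT_HASH = "content_hash"
    KEYWORD = "keyword"


@dataclass(frozen=True)
class Signal:
    kind: SignalKind
    value: str
    source: str  # hypothetical field: the company that uploaded the signal


class SignalStore:
    """Hypothetical in-memory stand-in for the shared signal database."""

    def __init__(self) -> None:
        self._signals: set[Signal] = set()

    def upload(self, signal: Signal) -> None:
        # Step 1: a participating company uploads a signal.
        self._signals.add(signal)

    def select(self, kinds: set[SignalKind]) -> list[Signal]:
        # Step 2: another company selects the signals it wants to run.
        chosen = [s for s in self._signals if s.kind in kinds]
        return sorted(chosen, key=lambda s: (s.kind.value, s.value))


@dataclass
class Account:
    account_id: str
    email: str
    username: str


def queue_for_review(signals: list[Signal], accounts: list[Account]) -> list[tuple[str, Signal]]:
    """Step 3: run signals against local accounts.

    A match is not proof of abuse. It only places the account in a queue
    for review against the platform's own policies.
    """
    queue = []
    for signal in signals:
        for account in accounts:
            if signal.kind is SignalKind.EMAIL:
                hit = account.email.lower() == signal.value.lower()
            elif signal.kind is SignalKind.USERNAME:
                hit = account.username.lower() == signal.value.lower()
            else:
                hit = False  # hashes and keywords would need content, not account data
            if hit:
                queue.append((account.account_id, signal))
    return queue


# Usage with made-up data: platform A uploads, platform B checks its accounts.
store = SignalStore()
store.upload(Signal(SignalKind.EMAIL, "bad.actor@example.com", "platform_a"))
store.upload(Signal(SignalKind.USERNAME, "friendly_peer_14", "platform_a"))

accounts = [
    Account("u1", "Bad.Actor@example.com", "someone_else"),
    Account("u2", "ok@example.com", "another_user"),
]
queue = queue_for_review(store.select({SignalKind.EMAIL}), accounts)
```

Returning a review queue instead of acting on matches directly mirrors the article's point that signals "offer clues for further investigation" and that each company applies its own enforcement process.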

“Until now, no consistent procedure existed for companies to collaborate against predatory actors evading detection across services,” Litton wrote. “Lantern fills this gap and shines a light on cross-platform attempts at online child sexual exploitation and abuse, helping to make the internet safer for kids.”

Significance of the Lantern Initiative

“This initiative holds immense significance in forging a path towards industry-wide collaboration to combat child sexual exploitation and abuse,” observed Alexandra Popken, vice president of trust and safety at WebPurify, a cloud-based web filtering and online child protection service in Irvine, Calif.

“Each platform faces its unique set of challenges, whether related to knowledge, tools, or resources, in addressing the escalating issue of CSAM,” she told TechNewsWorld. “Lantern symbolizes a unity among platforms in combating this issue and offers the practical infrastructure needed to pull it off.”

Lantern builds on the existing work of tech companies sharing information with law enforcement, added Ashley Johnson, a policy analyst with the Information Technology and Innovation Foundation, a research and public policy organization in Washington, D.C.

“Hopefully, we will see this kind of collaboration for other purposes,” she told TechNewsWorld. “I can see something like this being useful for combating terrorist content, as well, but I think online child sexual abuse is a great place to start with this kind of information sharing.”

Popken explained that malicious actors weaponize platforms through a range of tactics, employing various strategies to evade detection.

“In the past, platforms were hesitant to signal-share as it would imply an admission of exploitation,” she said. “However, initiatives like this demonstrate a shift in mindset, recognizing that cross-platform sharing ultimately enhances collective security and safeguards users’ well-being.”

Tracking Platform Nomads

Online predators use multiple platforms to contact and groom minors, meaning each social network only sees a portion of the predators’ evil actions, explained Chris Hauk, a consumer privacy champion at Pixel Privacy, a publisher of consumer security and privacy guides.

“Sharing information among the networks means the social platforms will be better armed with information to detect such actions,” he continued.

“Currently, when a predator is shut down on one app or website, they simply move on to another platform,” he said. “By sharing information, social networks can work to put a stop to this type of activity.”

Johnson explained that in cases of online grooming, it’s very common for perpetrators to have their victims move their communication off one site and onto another.

“A predator may suggest moving to another platform for privacy reasons or because it has fewer parental controls,” she said. “Having communication to track that activity across platforms is extremely important.”

Responsible Data Management in Child Safety Efforts

Lantern’s potential to speed up the identification of threats to children is a critical aspect of the program. “If data uploaded to Lantern can be scanned against other platforms in real time, auto-rejecting or surfacing content for review, that represents meaningful progress in enforcing this problem at scale,” Popken noted.

Litton pointed out in his posting that during the two years it has taken to develop Lantern, the coalition has not only designed the program to be effective against online child sexual exploitation and abuse but also to be managed responsibly via:

- Respecting human rights by subjecting the program to a Human Rights Impact Assessment (HRIA) conducted by Business for Social Responsibility, which will also offer ongoing guidance as the initiative evolves.

- Soliciting stakeholder engagement by asking more than 25 experts and organizations focused on child safety, digital rights, advocacy of marginalized communities, government, and law enforcement for feedback and inviting them to participate in the HRIA.

- Promoting transparency by including Lantern in The Tech Coalition’s annual transparency report and providing participating companies with recommendations on how to incorporate their participation in the program into their transparency reporting.

- Designing Lantern with safety and privacy in mind.

Importance of Privacy in Child Protection Measures

“Any data sharing requires privacy to be top of mind, especially when you’re dealing with information about children because they’re a vulnerable population,” Johnson said.

“It is important for the companies that take part in this to protect the identities of the children involved and protect their data and information from falling into the wrong hands,” she continued.

“Based on what we’ve seen from tech companies,” she said, “they’ve done a pretty good job of protecting victims’ privacy, so hopefully they’ll be able to keep that up.”

However, Paul Bischoff, privacy advocate at Comparitech, a reviews, advice, and information website for consumer security products, cautioned, “Lantern won’t be perfect.”

“An innocent person,” he told TechNewsWorld, “could unwittingly trigger a ‘signal’ that spreads information about them to other social networks.”

Comprehensive Overview on Combating Online Grooming

The Tech Coalition has published a research paper titled “Considerations for Detection, Response, and Prevention of Online Grooming” to shed light on the complexities of online grooming and outline the collective measures being undertaken by the technology sector.

Intended solely for educational purposes, this document delves into established protocols and the industry’s ongoing efforts to prevent and reduce the impact of such predatory behavior.

The Tech Coalition offers this paper as a direct download, with no registration or form submission required.